Kalman Filtering

Dynamic Linear Models have a linear Gaussian latent-state and observation model which is amenable to exact filtering because of special properties of the Gaussian distribution. This means the distribution of the latent-state (\(p(\textbf{x}_{0:T}|y_{1:T}, \theta)\)) can be learned about exactly, this distribution is commonly called the filtering distribution. Suppose a time-dependent process is observed discretely at times \(t = 1,\dots,T\), then a general DLM for this process can be written as:

\[\begin{aligned} y_t &= F_t x_t + v_t, &v_t &\sim \mathcal{N}(0, V), \\ x_t &= G_t x_{t-1} + v_t, &w_t &\sim \mathcal{N}(0, W), \\ x_0 &\sim \mathcal{N}(m_0, C_0). \end{aligned}\]

The latent-state forms a Markov Chain, \(p(x_t|x_{0:t-1},W) = p(x_t|x_{t-1},W)\) and the observations are conditionally independent given the corresponding value of the latent-state. The matrix \(F_t\) is known as the observation matrix, the matrix \(G_t\) is the state evolution matrix. \(V\) is the measurement variance and \(W\) is the system evolution noise.

The Kalman filter proceeds as follows, given observations of the process up until time \(t\), \(y_{1:t}\) and the posterior of the latent-state at time \(t\), then:

Prior at time \(t + 1\) is \(\mathcal{N}(a_t, R_t)\) where \(a_t = G_t m_t\) and \(R_t = G_t C_t G_t^T + W_t\)

One step prediction for time \(t + 1\) is \(\mathcal{N}(f_t, Q_t)\) where \(f_t = F_t a_t\) and \(Q_t = F_t R_t F_t^T + V_t\)

State update given the new observation, \(y_{t+1}\) is \(\mathcal{N}(m_{t+1}, C_{t+1})\) where \(m_{t+1} = a_t + K_t e_t\), \(C_t = (I - K_t * F_t)R_t(I - K_t * F_t) + K_tV_tK_t\) and \(K_t = R_t * F_t^T * Q^{-1}\), \(e_t = y_t - f_t\)

First Order Example

In order to illustrate the Kalman Filter implementation in Scala, we consider a first order polynomial DLM, where \(F_t = 1\), \(G_t = 1\) and the latent-state is one-dimensional:

import com.github.jonnylaw.dlm._

import breeze.linalg.{DenseMatrix, DenseVector}

val mod = Dlm.polynomial(1)

val p = DlmParameters(

v = DenseMatrix(3.0),

w = DenseMatrix(1.0),

m0 = DenseVector(0.0),

c0 = DenseMatrix(1.0)

)

val data = Dlm.simulateRegular(mod, p, 1.0).

steps.

take(1000).

toVector- The prior at time \(t + 1\) is implemented in the

KalmanFilterobject asadvanceState

val mt = // posterior mean of latent-state at time t

val ct = // posterior covariance of latent-state at time t

val time = t

val (at, rt) = KalmanFilter.advanceState(mod.g, mt, ct, time, p.w)- One step prediction is implemented in the

KalmanFilterobject asoneStepPrediction

val (ft, qt) = KalmanFilter.oneStepPrediction(mod.f, at, rt, time, p.v)- State update is implemented in the

KalmanFilterobject asupdateState

val y = Dlm.Data(t + 1, Some(DenseVector(5.0))) // an observation at time t + 1

val (mt1, ct1) = KalmanFilter.updateState(mod.f, at, rt, ft, qt, y, p.v)These three steps are implemented together into a single function stepKalmanFilter. This function is then used to fold over an Array[Dlm.Data] to determine the filtering distribution:

import cats.implicits._

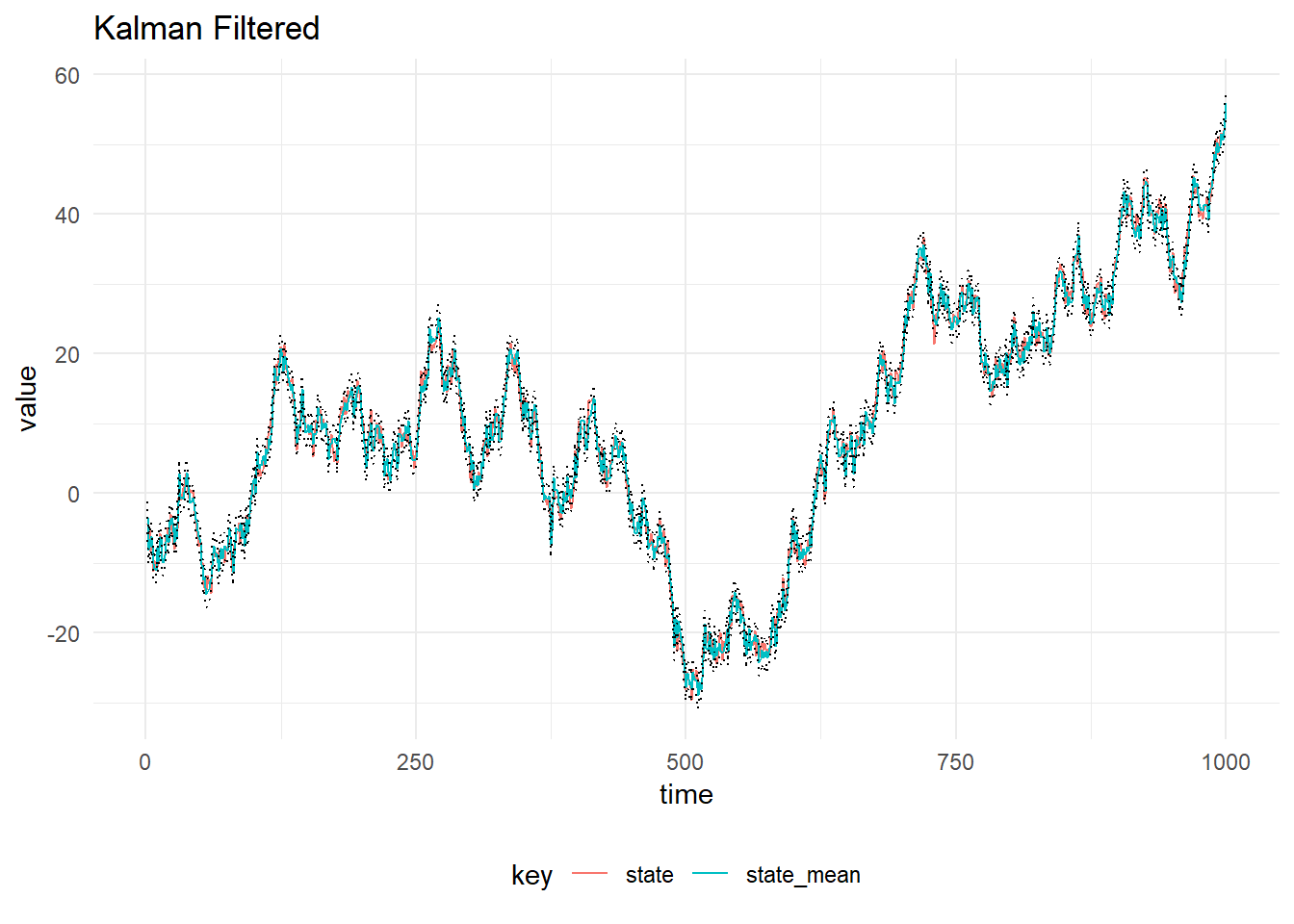

val filtered = KalmanFilter.filterDlm(mod, data.map(_._1), p)The result of filtering the simulated observations is plotted below, with 90% probability intervals. The system and observation variances, \(W_t\) and \(V_t\) are assumed to be constant in time and known.

Filter Stability

Numerical stability is important when implementing the Kalman Filter. One way of improving the stability of the filter is to use a square root filter, a further improvement is found by using the Singular Value Decomposition as detailed in https://arxiv.org/abs/1611.03686.

In order to run Singular Value Decomposition filter use:

val filtered = SvdFilter.filterDlm(mod, data.map(_._1), p)Other Filters

- Particle Filter

- Auxiliary Particle Filter

- Particle Gibbs

- Particle Gibbs with Ancestor Resampling